How to use AWS S3 for persistence in Kubernetes

We recently deployed a Kubernetes cluster based on Kubespray at a client. Since there is no out-of-the-box cloud storage, one of the first tasks to clear for the cluster deployment is data persistence.

We gave sshfs a try, and then thought we could also manage the distributed persistence using Goofys. Goofys allows you to simply mount an AWS S3 bucket as a file system. Since every node mounts the same bucket, all the S3 data is in effect shared between the different nodes of the cluster.

This post shows the few steps and configuration files to deploy a running iccube pod using Goofys as the pod persistence.

First, let’s check the nodes of the Kubernetes cluster:

    kubectl get nodes

    NAME       STATUS   ROLES           AGE   VERSION
    akita      Ready    <none>          9d    v1.25.4
    aomori     Ready    control-plane   29d   v1.25.4
    hokkaido   Ready    control-plane   29d   v1.25.4
    iwate      Ready    <none>          29d   v1.25.4
    miyagi     Ready    <none>          29d   v1.25.4

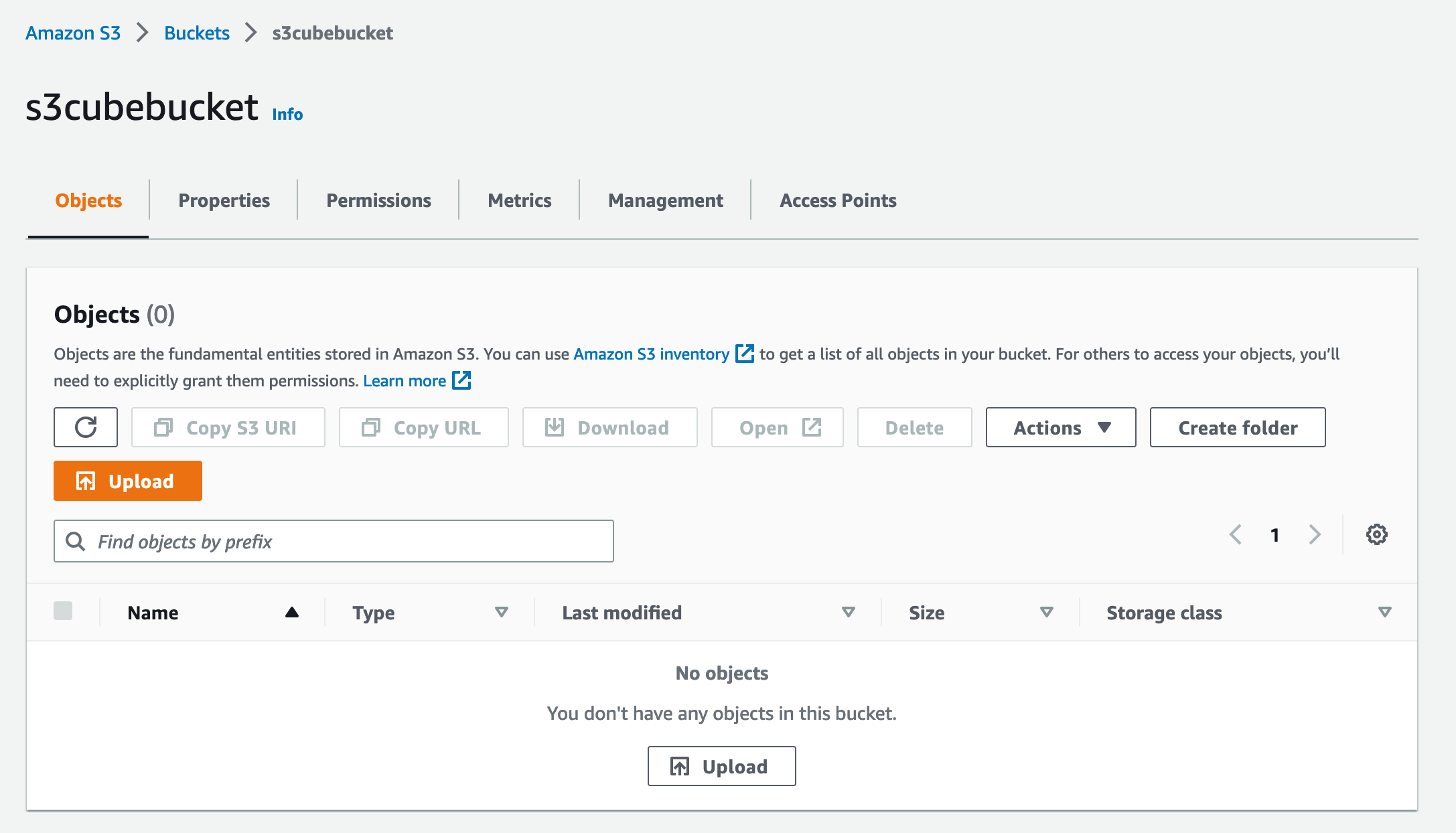

Let’s make sure we have the s3 bucket, named for this article s3cubebucket, to mount our files:
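The bucket can also be checked from a terminal with the AWS CLI. This is a minimal sketch, assuming the CLI is installed and configured with credentials that can reach the bucket; the helper name bucket_exists is hypothetical and not part of the original setup.

```shell
# Hypothetical helper: succeeds when the bucket exists and is reachable
# with the current credentials (head-bucket exits non-zero otherwise).
bucket_exists() {
  aws s3api head-bucket --bucket "$1" >/dev/null 2>&1
}

if bucket_exists s3cubebucket; then
  echo "bucket s3cubebucket is reachable"
else
  echo "bucket s3cubebucket is missing or not accessible"
fi
```

The check only tells you whether the bucket answers for the credentials in use; it does not say anything about its contents.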

And let’s run these Ansible tasks to deploy the AWS credentials, download the goofys binary, and mount the goofys filesystem directly on the non-control-plane nodes.

    ---
    # Directory that holds the AWS config and credentials for root
    - name: create .aws directory
      file:
        path: '/root/.aws'
        state: directory

    # Copy the local config and credentials files to the node
    - name: copy aws credentials
      ansible.builtin.copy:
        src: "{{ item }}"
        dest: /root/.aws
        mode: '0600'
        owner: root
      loop:
        - config
        - credentials

    - name: download goofys
      get_url:
        url: https://github.com/kahing/goofys/releases/latest/download/goofys
        dest: /usr/local/bin
        mode: '0755'
        owner: me

    # Unmount any previous mount; errors are ignored when nothing is mounted
    - name: umount
      command: sudo umount /kube/iccube
      ignore_errors: yes

    # Mount the bucket; files and directories are exposed with mode 0777
    - name: mount
      command: sudo /usr/local/bin/goofys -o allow_other --file-mode=0777 --dir-mode=0777 s3cubebucket /kube/iccube
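Before moving on, it is worth confirming on each node that the mount is actually in place. Here is a minimal sketch, assuming a Linux node where /proc/mounts lists the active mounts; the is_mounted helper and its optional second argument are illustrative names, not part of the playbook.

```shell
# Hypothetical helper: succeeds when the given mount point is listed in a
# mounts table. The second argument defaults to /proc/mounts and exists
# only to make the check easy to try against a sample file.
is_mounted() {
  mount_point="$1"
  mounts_file="${2:-/proc/mounts}"
  # Field 2 of each line in the mounts table is the mount point.
  awk -v mp="$mount_point" '$2 == mp { found = 1 } END { exit !found }' "$mounts_file"
}

if is_mounted /kube/iccube; then
  echo "goofys mount is present on /kube/iccube"
else
  echo "goofys mount is missing on /kube/iccube" >&2
fi
```

Running this on a node where the mount task failed prints the "missing" message, which is easier to spot than a pod that silently writes to an empty local directory.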

Speed testing

With the Goofys filesystem mounted, we can go to a node directly and measure the read/write speeds. We simply use dd to create a 1 GiB file and then read it back.

The steps used for the speed testing are mostly taken and adapted from the following article.

WRITE

    $ sync; dd if=/dev/zero of=tempfile bs=1M count=1024; sync
    1024+0 records in
    1024+0 records out
    1073741824 bytes (1.1 GB) copied, 10.405 s, 103 MB/s

READ biased with recent FS caching

    $ dd if=tempfile of=/dev/null bs=1M count=1024
    1024+0 records in
    1024+0 records out
    1073741824 bytes (1.1 GB) copied, 0.301232 s, 3.6 GB/s

READ after cleaning cache

    $ sudo /sbin/sysctl -w vm.drop_caches=3
    vm.drop_caches = 3
    $ dd if=tempfile of=/dev/null bs=1M count=1024
    1024+0 records in
    1024+0 records out
    1073741824 bytes (1.1 GB) copied, 10.4693 s, 103 MB/s
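dd reports throughput in decimal units (1 MB = 1,000,000 bytes), so the figures above can be re-derived from the byte count and the elapsed time. The small sketch below does this with awk; throughput_mb_s is a hypothetical helper name.

```shell
# Hypothetical helper: bytes and seconds in, decimal MB/s out (one decimal).
throughput_mb_s() {
  awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.1f\n", bytes / secs / 1000000 }'
}

throughput_mb_s 1073741824 10.405    # write: prints 103.2
throughput_mb_s 1073741824 10.4693   # uncached read: prints 102.6
```

Both values round to the 103 MB/s that dd displays, which shows that the uncached read and the write run at practically the same speed.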

cleanup

Let’s not forget to remove the temporary file created during the speed test.

rm tempfile

hdparm did not work for S3 mounts

I briefly tried to use hdparm to measure speed, hoping to compare with the dd results, but without success. hdparm works on block devices, and a FUSE mount point is a directory, not a block device.

    $ sudo hdparm -Tt /kube/iccube

    /kube/iccube:
    read() failed: Is a directory
    BLKGETSIZE failed: Inappropriate ioctl for device
    BLKFLSBUF failed: Inappropriate ioctl for device

Use Goofys with Kubernetes to deploy iccube

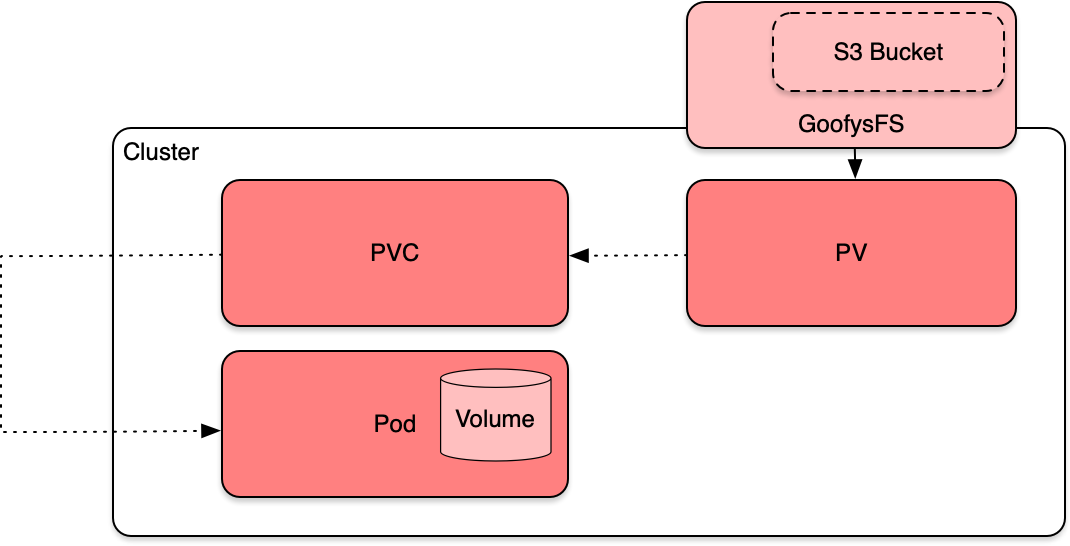

A quick overview of the target design is shown in the diagram below:

The binding between all the boxes happens once the pod has been scheduled on the cluster. In other words, we need to create a pod for the PVC/PV to be bound properly.

So, remember that our goal here is to deploy iccube pods on our Kubernetes cluster using the S3 bucket as persistence.

At first, it is not very obvious which YAML files are needed and how to prepare them for a proper deployment. Let’s list all 7 files first:

- namespace.yml

- local-storage.yml

- pv.yml and pv2.yml

- pvc.yml and pvc2.yml

- deployment.yml

And let’s go over what each file is used for.

namespace.yml

For the binding to work between the container and the PV/PVC, the PVC and the pod that uses it need to be in the same namespace (the StorageClass and the PV themselves are cluster-scoped). We will use the iccube namespace.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: iccube

local-storage.yml

This is the storage class that will be used in the PV/PVC definitions. There is close to no room for variation here, apart from the mount options.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: my-local-storage
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    mountOptions:
      - debug

pv.yml

This is the Persistent Volume definition. Two things to note here:

- it maps the path to the goofys mount (or to a path within the goofys mount), and

- we need to specify the node affinity so that it targets nodes that have the goofys mount set up.

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: my-volume
      labels:
        type: local
    spec:
      storageClassName: my-local-storage
      capacity:
        storage: 50Gi
      accessModes:
        - ReadWriteMany
      hostPath:
        path: "/kube/iccube/data"
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: kubernetes.io/hostname
                  operator: In
                  values:
                    - iwate
                    - miyagi

pvc.yml

Here again you need to define the storage size, but it is only checked against the PV capacity (the PVC request must not exceed it) and does not affect the actual goofys mount. Note two other things here:

- we need ReadWriteMany as the accessMode to make this accessible to other pods

- the namespace needs to match the namespace of the pod/deployment that comes after, so here iccube

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: iccube-pvc
      namespace: iccube
    spec:
      storageClassName: my-local-storage
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 3Gi
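The deployment also mounts a second claim, iccube-pvc2, backed by a second volume, my-volume2 (both names appear in the kubectl output later in this post). Their manifests are not shown, so here is a minimal sketch that writes them with shell heredocs. The hostPath /kube/iccube/bin, the 3Gi request, and the volumeName pin are assumptions made for illustration; adjust them to your own layout.

```shell
# Write pv2.yml: same shape as pv.yml, with a different name and path.
cat > pv2.yml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-volume2
  labels:
    type: local
spec:
  storageClassName: my-local-storage
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    # Assumption: the article does not give the path used for the second volume.
    path: "/kube/iccube/bin"
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - iwate
                - miyagi
EOF

# Write pvc2.yml: same shape as pvc.yml, with a different name.
cat > pvc2.yml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: iccube-pvc2
  namespace: iccube
spec:
  storageClassName: my-local-storage
  # Assumption: pinning the claim to my-volume2 is optional and not in the article.
  volumeName: my-volume2
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      # Assumption: same request size as iccube-pvc.
      storage: 3Gi
EOF
```

Since both volumes share the same storage class, capacity, and access mode, pinning the claim with volumeName is one way to make sure iccube-pvc2 ends up on my-volume2 rather than on whichever volume happens to match first.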

deployment.yml

Finally, this is our deployment file to deploy 2 pods for iccube. We skipped the content of iccube-pvc2 (along with pv2), so we will let the reader fill in the (easy) blanks.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: iccube
      namespace: iccube
    spec:
      selector:
        matchLabels:
          run: iccube
      replicas: 2
      template:
        metadata:
          labels:
            run: iccube
        spec:
          volumes:
            - name: iccube-volume
              persistentVolumeClaim:
                claimName: iccube-pvc
            - name: iccube-volume2
              persistentVolumeClaim:
                claimName: iccube-pvc2
          containers:
            - name: iccube
              image: ic3software/iccube:8.2.2-chromium
              ports:
                - containerPort: 8282
              volumeMounts:
                - mountPath: "/home/ic3"
                  mountPropagation: HostToContainer
                  name: iccube-volume
                - mountPath: "/opt/icCube/bin"
                  mountPropagation: HostToContainer
                  name: iccube-volume2

service.yml

We also need a service definition to expose the pods to the outside world. Here is a simple one that uses NodePort to expose the HTTP port of iccube.

    apiVersion: v1
    kind: Service
    metadata:
      name: iccube
      namespace: iccube
    spec:
      type: NodePort
      sessionAffinity: ClientIP
      ports:
        - port: 8282
          targetPort: 8282
          nodePort: 30000
          protocol: TCP
      # A Service selector is a plain label map; matchLabels is not supported here
      selector:
        run: iccube

Check the iccube pods are running

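Deploy the files one by one in the following order. The namespace comes first, since the PVCs, the deployment, and the service are all created in it:

    kubectl apply -f namespace.yml
    kubectl apply -f local-storage.yml
    kubectl apply -f pv.yml
    kubectl apply -f pv2.yml
    kubectl apply -f pvc.yml
    kubectl apply -f pvc2.yml
    kubectl apply -f deployment.yml
    kubectl apply -f service.yml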

We can check that the PVCs are bound properly:

    kubectl get pvc -n iccube -o wide

    NAME          STATUS   VOLUME       CAPACITY   ACCESS MODES   STORAGECLASS       AGE   VOLUMEMODE
    iccube-pvc    Bound    my-volume    50Gi       RWX            my-local-storage   41h   Filesystem
    iccube-pvc2   Bound    my-volume2   50Gi       RWX            my-local-storage   41h   Filesystem

And if they are, the pods should be started:

    kubectl get pods -n iccube -o wide

    NAME                      READY   STATUS    RESTARTS   AGE   IP              NODE     NOMINATED NODE   READINESS GATES
    iccube-59ffff668f-g68h6   1/1     Running   0          40h   10.233.121.70   miyagi   <none>           <none>
    iccube-59ffff668f-rmsbv   1/1     Running   0          41h   10.233.76.247   iwate    <none>           <none>

The pods can only be started on the cluster nodes specified in the pv’s nodeAffinity section.

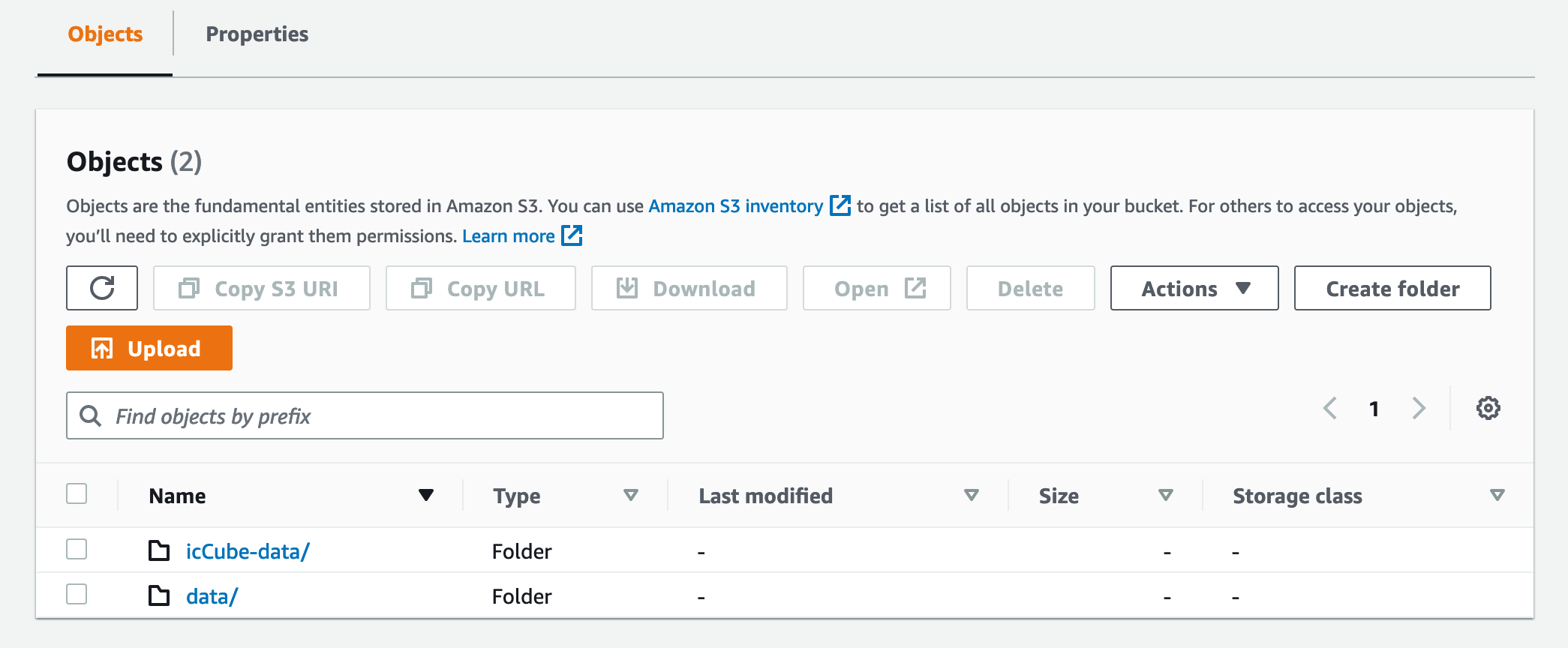

The S3 bucket contains the iccube files:

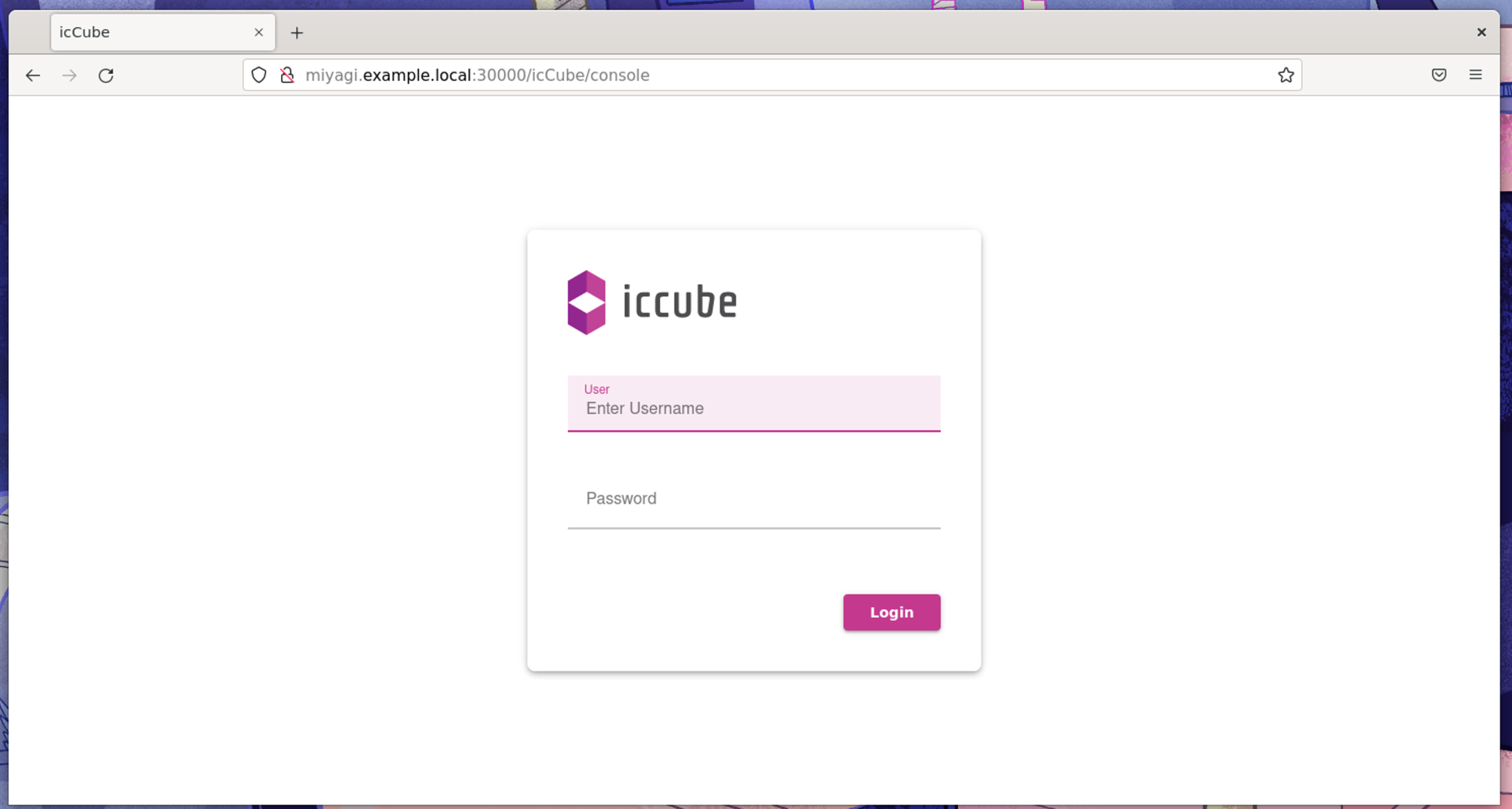

And if you are on the same network, you can now access iccube on port 30000, the nodePort specified in the service.
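To check the endpoint without a browser, a small curl probe can report the HTTP status returned through the NodePort. This is only a sketch: the helper name http_status is hypothetical, iwate is simply one of the worker nodes listed earlier, and the status code you get depends on how iccube answers at the root path.

```shell
# Hypothetical helper: print the HTTP status code returned by host:port.
# curl prints 000 when no connection could be made.
http_status() {
  curl -s -o /dev/null --max-time 5 -w '%{http_code}' "http://$1:$2/"
}

# "|| true" keeps the script going when the node cannot be reached.
status="$(http_status iwate 30000 || true)"
echo "iccube answered with HTTP status: ${status}"
```

Since NodePort opens the port on every node, the same probe should work against any node of the cluster, not just the ones running the pods.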

Going further

There are a few things you can check further. Deleting the pods (or creating new ones) does not delete the data in the S3 bucket, so the data is properly propagated to new pods.

The read/write speeds are very decent: about 103 MB/s for both the write and the uncached read in the dd test.
